📌 Introduction

The rapid evolution of generative AI is reshaping industries, enabling businesses to automate creative tasks, improve efficiency, and enhance user experiences. At the core of this revolution is a robust infrastructure layer 🏗️, which provides the computational power and technological foundation necessary to develop, train, and deploy large-scale AI models 🤖. This article explores the key infrastructure components that support the generative AI ecosystem and how they influence the AI value chain.

🔄 The Evolution of Digital Production Models

Historically, digital production models were structured around linear OS layers 📟, where hardware, software, and applications followed a sequential processing framework. However, with the rise of AI and machine learning, this model has shifted towards an inference ecosystem 🧠, where AI-driven workflows continuously refine and optimize outputs. The inference ecosystem is characterized by the following:

Real-time adaptability ⚡ – AI systems can infer patterns dynamically rather than following pre-programmed logic.

Data-driven decision-making 📊 – AI models refine outputs based on live data, enhancing efficiency.

Decentralized computing ☁️ – AI workloads are distributed across cloud, edge, and local environments to optimize processing power.

This transition necessitates reimagining AI infrastructure, emphasizing scalability, efficiency, and computational power across the generative AI stack.

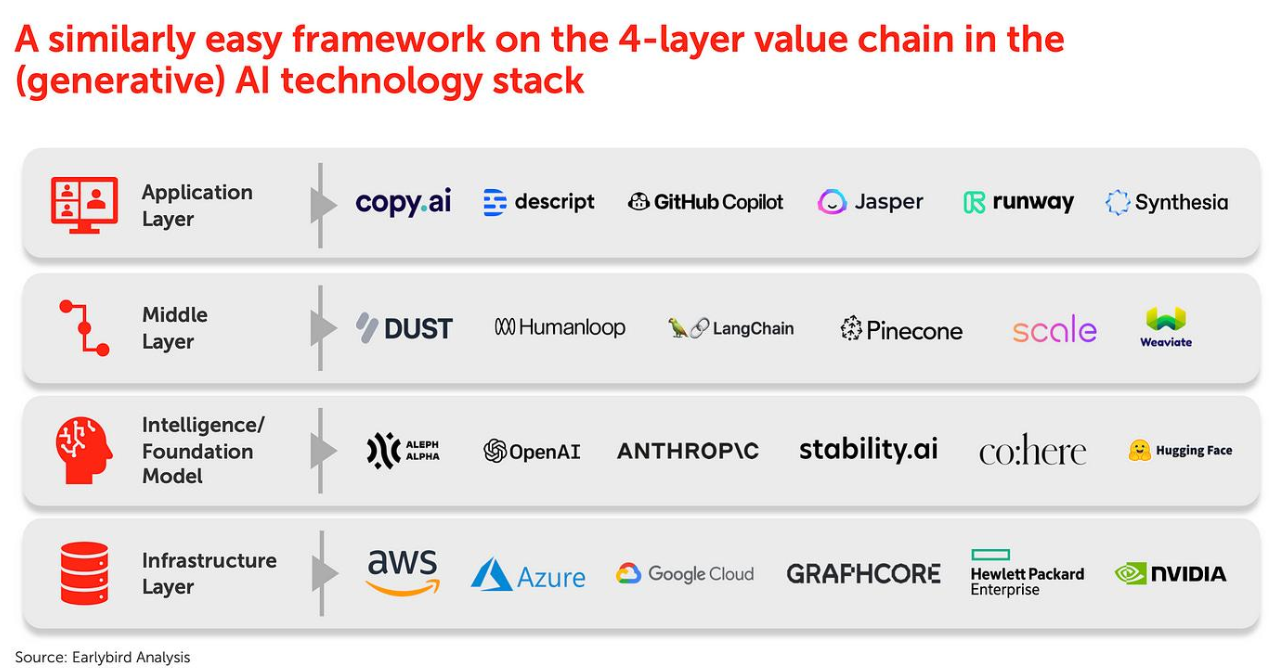

🏗️ The 4-Layer Generative AI Stack

To understand why infrastructure matters, it helps to look at the broader AI technology stack. The generative AI value chain consists of four interdependent layers:

Infrastructure Layer – 🏭 The foundation for AI computation and data storage.

Intelligence/Foundation Model Layer – 🧠 Organizations building foundational AI models.

Middle Layer – 🔗 Tools and frameworks enabling AI utilization.

Application Layer – 🎨 End-user AI-powered solutions.

This article will focus on the Infrastructure Layer, the backbone of AI operations.

☁️ 1. Cloud Computing Providers

Cloud computing platforms are crucial for hosting and scaling AI models. Generative AI models require immense computational resources, which cloud providers supply through high-performance data centers and scalable infrastructure. Leading players in this space include:

Amazon Web Services (AWS) 🌍 – Offers AI-focused services such as SageMaker, EC2 instances with GPUs, and AI-dedicated infrastructure.

Microsoft Azure 🔵 – Provides AI supercomputing capabilities with AI-optimized virtual machines and scalable data solutions.

Google Cloud 🌤️ – Specializes in AI/ML workloads through Tensor Processing Units (TPUs) and Vertex AI.

Why Cloud Computing Matters

Scalability 📈 – Enables businesses to adjust AI workloads dynamically based on demand.

Cost-Efficiency 💰 – Reduces the need for expensive on-premise infrastructure.

Accessibility 🔓 – Democratizes AI by offering services to startups and enterprises alike.

🎛️ 2. Specialized AI Hardware

Training and deploying generative AI models require specialized hardware optimized for deep learning. The primary hardware providers include:

NVIDIA 🎮 – Market leader in GPUs (e.g., A100, H100) tailored for AI training and inference.

Graphcore ⚡ – Develops Intelligence Processing Units (IPUs) to accelerate AI workloads.

Hewlett Packard Enterprise (HPE) 🏢 – Provides AI-optimized hardware solutions for enterprise applications.

Importance of AI Hardware

Increased Processing Power ⚙️ – Enhances training speed and inference efficiency.

Energy Efficiency 🔋 – Reduces power consumption while optimizing AI performance.

Advanced AI Capabilities 🚀 – Supports larger, more complex AI models.
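To make the link between model size and hardware concrete, here is a rough back-of-the-envelope sketch. It only counts the memory needed to hold model weights; training also needs room for gradients, optimizer state, and activations, so real requirements are higher. The 70B-parameter model and the 80 GB device size (the capacity of some A100 and H100 variants) are illustrative assumptions, and the function names are hypothetical.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Memory needed to hold the model weights, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_accelerators(num_params: int, precision: str, device_memory_gb: float) -> int:
    """Smallest number of devices whose combined memory fits the weights alone."""
    return math.ceil(weight_memory_gb(num_params, precision) / device_memory_gb)


if __name__ == "__main__":
    params = 70_000_000_000  # hypothetical 70B-parameter model (assumption)
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        devices = min_accelerators(params, precision, device_memory_gb=80.0)
        print(f"{precision}: {gb:.0f} GB of weights, at least {devices} x 80 GB devices")
```

Even this lower bound shows why large models are split across several accelerators and why lower-precision formats are attractive for inference.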

🗄️ 3. Data Storage and Management

Generative AI relies on vast amounts of data, necessitating robust storage solutions. Key infrastructure providers offer:

Databases 📚 – Vector databases like Weaviate and Pinecone optimize embedding search and retrieval, while data platforms like Scale focus on labeling and preparing training data.

Object Storage 🎛️ – Services like AWS S3, Google Cloud Storage, and Azure Blob Storage efficiently store massive datasets.

Distributed Computing 🔗 – Apache Spark facilitates parallel data processing for AI training pipelines.
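The vector search mentioned above boils down to finding the stored embeddings closest to a query embedding. The sketch below is a minimal brute-force version using cosine similarity in plain Python. It does not use the API of Weaviate, Pinecone, or any other product; the document IDs and three-dimensional vectors are made up for illustration, and production systems typically rely on approximate nearest-neighbor indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query, best first."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [(doc_id, score) for score, doc_id in scored[:k]]


if __name__ == "__main__":
    # Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
    index = {
        "doc-gpu": [0.9, 0.1, 0.0],
        "doc-storage": [0.1, 0.9, 0.1],
        "doc-network": [0.0, 0.2, 0.9],
    }
    for doc_id, score in top_k([1.0, 0.2, 0.0], index, k=2):
        print(doc_id, round(score, 3))
```

A brute-force scan like this costs time proportional to the number of stored vectors, which is the bottleneck that dedicated vector databases are built to avoid.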

Why Data Storage is Critical

Efficient Data Access 🚀 – Ensures AI models can quickly retrieve and process large datasets.

Security & Compliance 🔒 – Meets industry regulations for sensitive AI applications.

Optimized Performance 🎯 – Enables real-time AI model operations.

🔌 4. AI-Oriented Networking & Compute Optimization

Efficient networking and optimization strategies enhance AI performance:

High-Speed Networking 🚅 – NVIDIA, which acquired Mellanox in 2020, offers high-bandwidth interconnects such as InfiniBand to accelerate AI workloads.

Edge Computing 🌎 – Distributed AI inference at the edge improves real-time processing.

Federated Learning 🔗 – Decentralized AI training enhances privacy and data efficiency.
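As an illustration of the federated learning idea, the sketch below shows federated averaging, one common aggregation scheme (the article does not prescribe a specific algorithm). Each client trains locally and sends only its model weights and sample count; the server combines them into a weighted average, so raw data never leaves the client. The function name and the toy two-parameter "models" are assumptions for illustration.

```python
def federated_average(client_updates):
    """Combine client weights into one model, weighting each client by its sample count.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats with the same length for every client.
    """
    total_samples = sum(num_samples for _, num_samples in client_updates)
    if total_samples <= 0:
        raise ValueError("at least one client must report a positive sample count")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, num_samples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weight vectors of the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * num_samples / total_samples
    return averaged


if __name__ == "__main__":
    # Hypothetical updates from two clients holding 100 and 300 local samples.
    updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
    print(federated_average(updates))
```

Weighting by sample count keeps a client with little data from pulling the shared model as hard as a client with a lot of data.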

Networking Benefits for AI

Reduces Latency ⏳ – Enhances response times for AI-driven applications.

Enables Large-Scale AI Training 🏋️ – Supports multi-node AI computations.

Optimizes Costs 💰 – Reduces cloud expenditures by keeping accelerators busy instead of waiting on data transfers.
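To see why link speed matters for multi-node training, consider a rough estimate of how long it takes to synchronize gradients with a ring all-reduce, a widely used collective operation. In a ring of N nodes, each node sends about 2 × (N − 1) / N times the payload size. The sketch below assumes a bandwidth-bound transfer and ignores latency, protocol overhead, and overlap with computation; the payload size, node count, and link speeds are hypothetical.

```python
def ring_allreduce_seconds(payload_bytes: float, num_nodes: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce over links of link_gbps gigabits/s."""
    if num_nodes < 1 or link_gbps <= 0:
        raise ValueError("num_nodes must be >= 1 and link_gbps must be positive")
    if num_nodes == 1:
        return 0.0  # nothing to synchronize on a single node

    bytes_per_second = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    # Each node sends (and receives) 2 * (N - 1) / N times the payload.
    traffic_per_node = 2 * (num_nodes - 1) / num_nodes * payload_bytes
    return traffic_per_node / bytes_per_second


if __name__ == "__main__":
    gradients = 14e9  # hypothetical: 7B parameters at 2 bytes each
    for gbps in (100, 400):
        seconds = ring_allreduce_seconds(gradients, num_nodes=8, link_gbps=gbps)
        print(f"{gbps} Gb/s links: about {seconds:.2f} s per synchronization")
```

Because this synchronization can happen at every training step, faster interconnects translate fairly directly into less idle time for expensive accelerators.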

🔮 The Future of AI Infrastructure

As generative AI advances, infrastructure innovation will be crucial to overcoming computational bottlenecks. Future trends include:

Quantum Computing ⚛️ – Could revolutionize AI training speeds.

AI-Specific Chips 🖥️ – Custom processors designed explicitly for AI workloads.

Sustainable AI Computing 🌿 – Energy-efficient AI data centers to reduce carbon footprints.

🏁 Conclusion

The infrastructure layer is the backbone of the generative AI ecosystem, enabling companies to develop, train, and deploy powerful AI models. Cloud computing, specialized AI hardware, data storage, and high-speed networking all play a vital role in scaling AI capabilities. As demand for generative AI grows, infrastructure innovations will continue to shape the future of artificial intelligence.

🎯 Key Takeaways:

Cloud computing platforms ☁️ (AWS, Azure, Google Cloud) provide scalable AI resources.

Specialized AI hardware 🎮 (NVIDIA, Graphcore, HPE) optimizes AI model performance.

Data storage solutions 🗄️ (vector databases such as Weaviate and Pinecone, object stores such as AWS S3) ensure efficient AI data processing.

High-speed networking 🚀 enhances AI training and inference capabilities.

Businesses investing in generative AI must prioritize robust infrastructure 🏗️ to remain competitive in the evolving AI landscape.