Tech Analysis

Introduction

Digital production models have shifted from the static, hierarchical structure of the traditional Operating System (OS) model to the dynamic, scalable design of the Inference Ecosystem for AI. The shift is not merely technological; it reflects broader economic, cultural, and industrial changes and a growing need for adaptability, scalability, and innovation. This analysis traces the evolution of these models, their implications for infrastructure growth, and possible trajectories for the next wave of digital transformation. 🌐

The OS model, as illustrated in the linear hierarchy diagram, has been foundational in computing. It comprises four distinct layers:

Strengths:

Limitations:

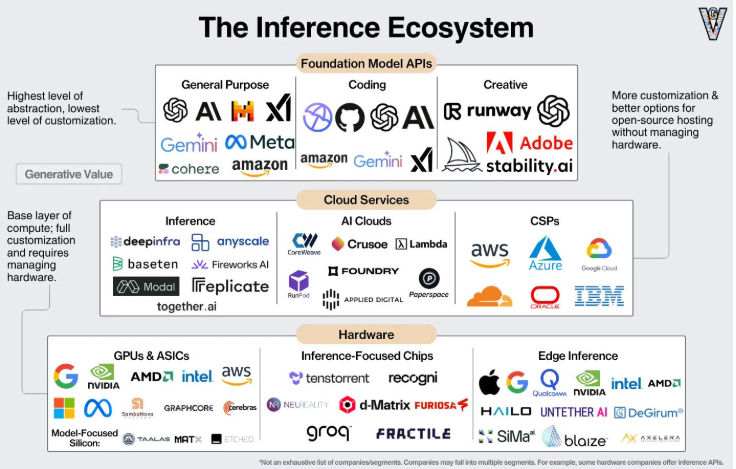

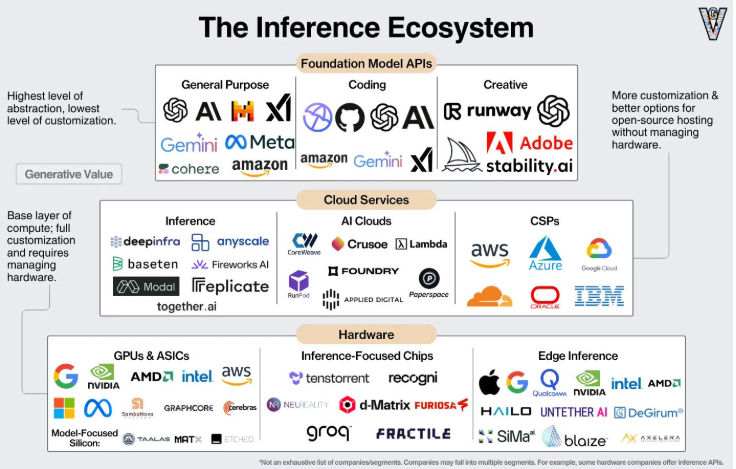

The Inference Ecosystem, a modern approach to digital infrastructure, enables AI-driven workflows across distributed systems. It represents a fundamental shift in digital production, integrating multiple layers of abstraction and functionality:

Key Points:

| Economic Impact | Example | Metric |
|---|---|---|
| AI Democratization | OpenAI API enabling startups | 100,000+ API developers |
| Cost Efficiency | AWS Lambda (pay-as-you-go model) | About $0.0000167 per GB-second of compute, plus $0.20 per 1M requests (list price; varies by region and architecture) |
| Global Reach | Google Cloud’s global infrastructure | 35 regions worldwide |
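
To make the pay-as-you-go row concrete, here is a minimal sketch of how such a bill is typically composed: a per-request charge plus a charge for compute time, measured as memory multiplied by duration. The function name, the workload figures, and both rates are assumptions for illustration. The rates are chosen to resemble published AWS Lambda list prices, but they change over time and by region, and the sketch ignores any free tier.

```python
def monthly_cost(requests, avg_duration_s, memory_gb,
                 price_per_request, price_per_gb_second):
    """Estimate a pay-as-you-go bill: request charge plus compute charge."""
    # Flat fee for each invocation.
    request_charge = requests * price_per_request
    # Compute is billed on memory x time, summed over all invocations.
    gb_seconds = requests * avg_duration_s * memory_gb
    compute_charge = gb_seconds * price_per_gb_second
    return request_charge + compute_charge


# Hypothetical workload: 1M requests per month, 200 ms each, 512 MB of memory.
# Both rates below are assumed values, not authoritative pricing.
estimate = monthly_cost(
    requests=1_000_000,
    avg_duration_s=0.2,
    memory_gb=0.5,
    price_per_request=0.0000002,        # assumed: $0.20 per 1M requests
    price_per_gb_second=0.0000166667,   # assumed compute rate
)
print(f"Estimated monthly cost: ${estimate:.2f}")
```

Under these assumed numbers the compute charge dominates the request charge. That is why, in this billing model, function duration and memory size usually matter more to the bill than raw request counts.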

| Technological Innovation | Example | Metric |
|---|---|---|
| Specialized Hardware | NVIDIA’s A100 GPU | Up to 20x performance improvement over the previous generation (vendor claim) |
| Edge Computing | AWS IoT Greengrass (IoT AI at the edge) | 99.9% reduced latency |

The evolution from the OS model to the Inference Ecosystem represents a paradigm shift...